Using Agentic AI to Build Production-Ready Terraform Modules

With the rapid rise of Agentic tools across companies, it’s time to focus on how we can work with them effectively. It is time to start building a collaborative environment, where we can get the best work out of these tools. Instead of fighting them, we can use these tools to handle the boring, painful, and often brain-numbing tasks we face daily.

One great example of this is something I worked on recently: the development of Terraform modules. The Terraform module environment in question was significant in both size and scope, and had just a splash (ocean) of technical debt built into it.

With the expanding workload of clients being onboarded onto the platform, these modules quickly became a pain point. While I previously wrote about resolving these issues through left-shifting compliance, the core development work still had to happen. This blog goes into how I resolved this pain point and reduced the development time from weeks to a couple of hours.

Defining a Terraform Module

What is a Terraform module?

Well that might seem like a simple question to anyone versed in Terraform, so simple that practically every LLM on the market can produce “a” Terraform module in a couple of seconds. But, could you just take that module and deploy it into production?

Absolutely not! You know that the module produced wouldn’t just magically align itself to your security and compliance requirements. Luckily, we are able to augment these Agentic tools with context that provides them with these guidelines, making sure that the code produced is up to the standard expected for a production module.

Well, that seems easy enough: simply connect the Agentic tool via MCP to either your GitHub repository or your Confluence, where you have a well-maintained and up-to-date guide for developers on what your company defines as a Terraform module!

What do you mean you don’t have one! Well luckily we have an escape path.

Generating a Developer Guide

There is a saying that code is documentation for machines, which doesn’t really help us meat bags when we just have code, but if we dig deeper into the saying we can see our escape path from our mini-document-driven pit of despair. We have access to a machine that can read the code and turn it into documentation for us. While the machine-generated documentation may need corrections, it is a powerful tool to make the unknown understandable and actionable.

So here is a simple rundown of the process I followed to turn the existing Terraform modules into human-readable (and AI readable) documentation:

- Identify existing “Production Grade” modules.

  If these don’t already exist, this process falls on its face; you would be better off working with the tool to develop a guide from scratch.

  In my case, these modules were all stored in a VCS (GitHub), which makes them easy to pull down.

- Set up an MCP server for connectivity to GitHub.

  This step is optional; another solution is to clone the repository into a local folder and allow the Agentic tool to read it from there.

  If you aren’t sure what MCP is, here is a great rundown on the USB-C protocol for AI connectivity.

- Work with the Agentic tool to generate a Developer Guide.

  This is the meat of the work: the tool reads the module code and creates the developer guide.

- Use the Developer Guide to create or uplift modules.

  Now the guide can be used, updated and then used again to keep all modules aligned to the changing business processes or requirements.
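For the local-clone route, it can help to see exactly which files the tool will be reading. The following is a minimal sketch, not part of my original workflow: the function name `collect_module_files` and the choice of file extensions are assumptions for illustration, and your modules may need a different filter.

```python
from pathlib import Path


def collect_module_files(module_dir: str) -> dict[str, str]:
    """Return {relative path: contents} for the Terraform and Markdown files in a cloned module."""
    root = Path(module_dir)
    wanted = {".tf", ".tfvars", ".md"}  # assumed set of relevant extensions
    files: dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        relative = path.relative_to(root)
        # Skip provider caches and VCS metadata inside the clone
        if ".terraform" in relative.parts or ".git" in relative.parts:
            continue
        if path.is_file() and path.suffix in wanted:
            files[relative.as_posix()] = path.read_text(encoding="utf-8")
    return files
```

Printing the keys of the returned dictionary gives a quick inventory of what the module actually contains before pointing the tool at the folder.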

Turning Code into Documentation

Let’s dig more into generating the guide, and have a look at the prompts used to produce the developer guide.

The initial prompt instructs the model to analyse the existing module, and turn it into a Developer Guide for that module.

Analyse the following repository that contains a Terraform module.

Create a developer guide based on the provided repository that will be used to create or uplift Terraform modules in the future.

Store the developer guide as DEVELOPER_GUIDE.md.

Do not include features like the GitHub pipelines.

The repository is "org/repository".

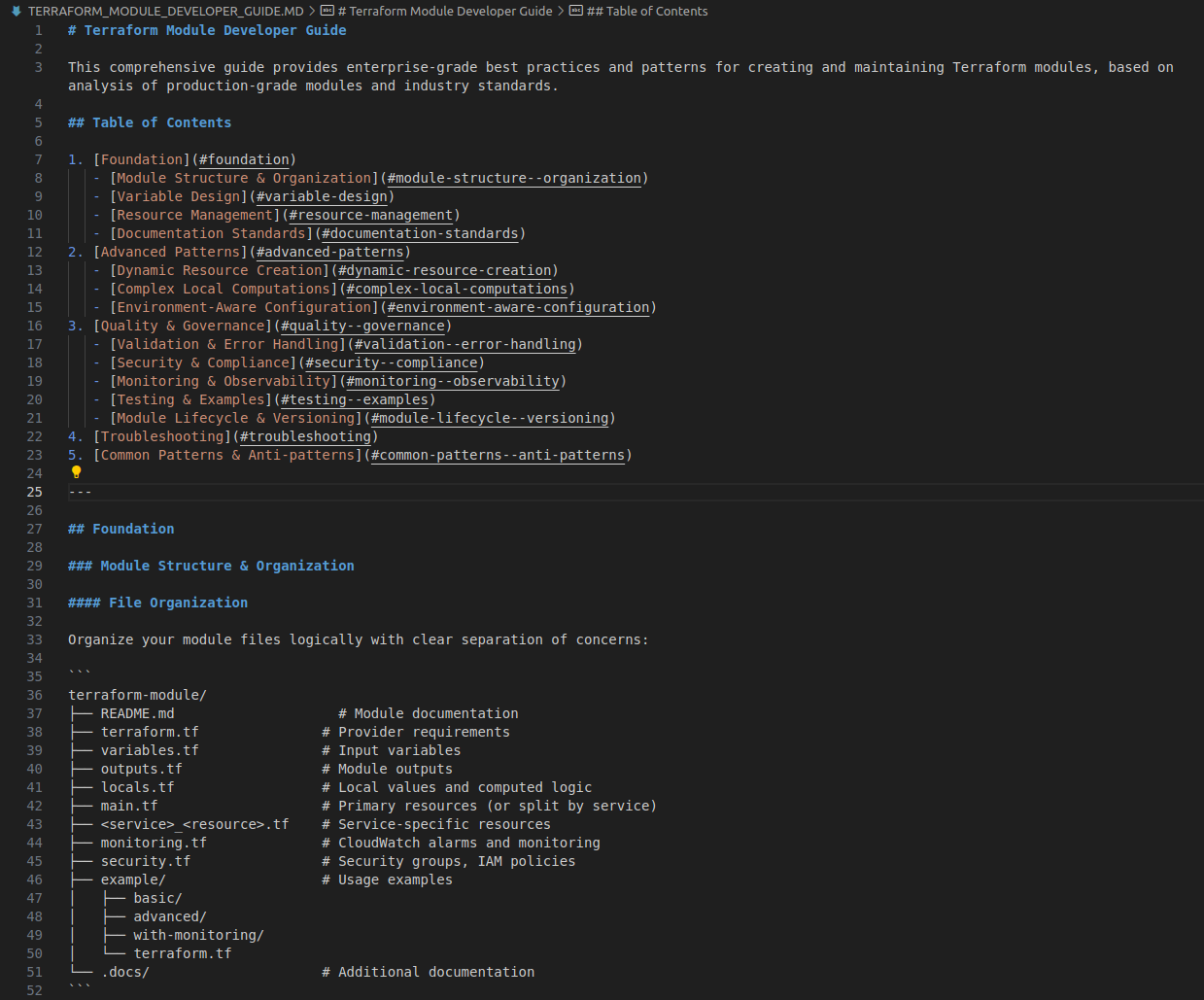

Short, simple and sweet. With a small prompt like the above you will get an open-ended response, with details like the layout of the guide left completely up to the model. If you have an existing layout or other requirements you want to include in the guide, they need to be defined in the prompt. You can still work on the developer guide and update it later, but it is easier to get this right in the first instance.
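If you do want to pin the layout down, one option is to build the prompt from a template. This is only a sketch under my own assumptions: the helper `build_guide_prompt` and the wording of the "Structure the guide" instruction are hypothetical, and the section names are borrowed from the guide sections mentioned later in this post.

```python
from typing import Sequence


def build_guide_prompt(repository: str, sections: Sequence[str] = ()) -> str:
    """Build the analysis prompt, optionally fixing the layout of the guide."""
    lines = [
        "Analyse the following repository that contains a Terraform module.",
        "Create a developer guide based on the provided repository that will be "
        "used to create or uplift Terraform modules in the future.",
        "Store the developer guide as DEVELOPER_GUIDE.md.",
        "Do not include features like the GitHub pipelines.",
    ]
    if sections:
        # Hypothetical extra instruction that constrains the layout
        lines.append("Structure the guide using exactly these sections, in this order:")
        lines.extend(f"- {name}" for name in sections)
    lines.append(f'The repository is "{repository}".')
    return "\n".join(lines)


print(build_guide_prompt("org/repository", ["TESTING_AND_EXAMPLES", "DYNAMIC_RESOURCE_CREATION"]))
```

Called without sections, the function reproduces the open-ended prompt above, so the same helper covers both cases.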

Guide Chaining

That prompt snippet can be reused to generate guides for several modules, allowing for something pretty powerful. The individual module developer guides can be combined into a generic “Terraform Module Guide” that contains all the requirements, defined and undefined, that exist in the company for Terraform modules. This is a form of task chaining, which I’ll call Guide Chaining.

The LLM is able to define the underlying requirements that may be hidden only in the coding patterns.

Combine the developer guides together into a master, generic "Terraform Module" Developer Guide.

Make sure to capture the underlying requirements.

And finally, perform a little bit of cleanup to reduce the amount of context when using the guide in the future.

Read the terraform module developer guide, remove any redundant content

With that, I now have a 1,500-line developer guide that does a fantastic job of capturing the requirements for future and current Terraform modules.
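The whole chain can be written down as an ordered list of prompts: one per module, then the combine step, then the cleanup. The sketch below is illustrative only. The function `guide_chain_prompts` is hypothetical, and so is the per-module file naming, which I am assuming here so that the individual guides do not overwrite each other.

```python
def guide_chain_prompts(repositories: list[str]) -> list[str]:
    """Return the prompts for Guide Chaining in the order they would be run."""
    prompts = []
    guide_files = []
    for repo in repositories:
        # Assumed naming scheme: one guide file per module repository
        guide_file = f"DEVELOPER_GUIDE_{repo.split('/')[-1]}.md"
        guide_files.append(guide_file)
        prompts.append(
            "Analyse the following repository that contains a Terraform module.\n"
            "Create a developer guide based on the provided repository that will be "
            "used to create or uplift Terraform modules in the future.\n"
            f"Store the developer guide as {guide_file}.\n"
            "Do not include features like the GitHub pipelines.\n"
            f'The repository is "{repo}".'
        )
    # Combine step, naming the per-module guides explicitly
    prompts.append(
        f"Combine the developer guides ({', '.join(guide_files)}) together into a master, "
        'generic "Terraform Module" Developer Guide.\n'
        "Make sure to capture the underlying requirements."
    )
    # Cleanup step, to reduce the context the guide consumes later
    prompts.append("Read the terraform module developer guide, remove any redundant content")
    return prompts
```

For two repositories this yields four prompts, which makes it easy to see how the amount of work grows with the number of modules you sample.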

Producing a Production Grade Module

Now that the guide has been created, it is the single source of truth for developing Terraform modules. Any updated requirements or changes are made first to the document, then applied to the Terraform modules. Creating a new Terraform module is now incredibly simple. Instead of having to spend hours figuring out what is required, I can get a boilerplate that requires minimal modifications.

The generated document can either be stored in a central VCS repository with other types of guides and retrieved by MCP again, or just copied to the folder and referenced by the prompt.

Using the developer guide, TERRAFORM_DEVELOPER_GUIDE.md, create a Terraform module for AWS Step Functions.

Follow the requirements outlined in the document, making sure to ensure compliance with the document.

If you have any question about the development, stop and ask the user.

Or, if uplifting an existing module:

Uplift the existing Terraform Module to conform to the developer guide, TERRAFORM_DEVELOPER_GUIDE.md.

Follow the requirements outlined in the document, making sure to ensure compliance with the document.

If you have any question about the development, stop and ask the user.

Now the process of developing or uplifting Terraform modules has gone from a week of work and testing to an afternoon of prompting (and still testing).

Final Thoughts

While this process worked well, it does have a few issues. One major problem is context: developing even a simple Terraform module like the one for Step Functions used over half the context. For more complex modules, or when uplifting complex modules, you may find that you run out of context. One approach to fix this would be to separate the developer guide into logical documents, aligned to the different sections in the core document (TESTING_AND_EXAMPLES, DYNAMIC_RESOURCE_CREATION and the like). The uplift can then be split into smaller subtasks (uplift testing in one task and the examples in another). As AI context gets larger this becomes less of a problem, but it is something we need to manage carefully for now.
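Splitting the guide does not need to be done by hand. Here is a rough sketch of the idea, assuming the guide is Markdown and that each logical section starts with a `## ` heading; the function `split_guide` and the file naming are my own illustration, not something the tool produced.

```python
import re


def split_guide(markdown: str) -> dict[str, str]:
    """Split a Markdown guide into {FILE_NAME.md: section text} on '## ' headings.

    Anything before the first '## ' heading (title, introduction) is dropped.
    A line starting with '## ' inside a fenced code block would also be treated
    as a heading, so check the output by hand.
    """
    documents: dict[str, str] = {}
    current_name = None
    current_lines: list[str] = []

    def flush() -> None:
        if current_name is not None:
            documents[current_name] = "\n".join(current_lines).strip() + "\n"

    for line in markdown.splitlines():
        if line.startswith("## "):
            flush()
            title = line[3:].strip()
            # "Testing and Examples" -> "TESTING_AND_EXAMPLES.md"
            current_name = re.sub(r"[^A-Za-z0-9]+", "_", title).strip("_").upper() + ".md"
            current_lines = [line]
        elif current_name is not None:
            current_lines.append(line)
    flush()
    return documents
```

Each resulting document can then be referenced on its own in a smaller uplift prompt, so only the relevant requirements take up context.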

The code itself still needs to be double-checked for consistency with the developer guide, especially during the initial development phase of the guide. Having compliance and security scanning built into the PR process would assist greatly in catching any problems before they are released to be consumed.
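Some of that double-checking can be automated cheaply. As a small sketch, the check below verifies that a generated module contains a set of expected files. The list in `REQUIRED_FILES` is an assumption based on the common Terraform module layout; in practice it should come from whatever your developer guide actually requires.

```python
from pathlib import Path

# Assumed file list for illustration; replace with the requirements from your guide
REQUIRED_FILES = ("main.tf", "variables.tf", "outputs.tf", "README.md")


def missing_files(module_dir: str, required: tuple[str, ...] = REQUIRED_FILES) -> list[str]:
    """Return the required files that are absent from the module directory."""
    root = Path(module_dir)
    return [name for name in required if not (root / name).is_file()]
```

A check like this only covers structure, not content, so it complements rather than replaces a review against the guide and the scanning in the PR process.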