Don't Be the Bottleneck

Being the bottleneck of any process is no fun for anyone involved, so why do cloud teams constantly position themselves directly in the path of cloud adoption? It comes down to one word, the word that sends shivers down any cloud engineer’s spine, no matter how seasoned they are…

Compliance

So, let’s talk about it. I will be coming at this topic from an AWS lens, but the same processes and tools can be applied to all cloud providers and to the new-age on-premises environment.

Setting the Stage

Sit back in your seat and imagine the following (or just have flashbacks to when something similar happened in your organisation). The organisation has finally pulled the trigger on the move to the cloud, whether because it is sick of the OpEx costs of maintaining on-premises infrastructure or for other reasons. You set up SSO, an account factory, and pipelines to automate the creation of accounts for your domains, implementing SCPs, RCPs, and IAM policies to secure these accounts. You hand the accounts over to the developers, and… they create a public S3 bucket, and by the end of the working day all the data in it is circulating in a torrent. 😵 Not a fun time for anyone involved.

So, what do you do about it? Well, you could create a baseline module in your IaC tool of choice that implements best practice for S3 buckets. Fantastic! Now when developers want an S3 bucket, they can reference your module and get a secure bucket to do whatever they want with! But developers are never satisfied, and now they want to deploy a Windows EC2 instance (shock, horror!), so you go and build out a module for EC2 consumption. It works great, fantastic even, until another domain needs a Linux EC2 instance…

Even a simple auto-scaled, load-balanced application needs seven maintained modules!

And with that Linux EC2 instance request, you are now stuck in bottleneck hell! Every resource your domains want to consume requires work from your team to build out a module, and every use case that doesn’t fit needs, you guessed it, another module. Each module also has its own lifecycle to manage, and everything gets even more complicated when modules are nested! Modules are really great at ensuring that all resources deployed to the cloud are secure, because everyone will be bottlenecked by the speed of your team and nobody will ever manage to deploy a workload into the cloud!

Not really great when the cloud team is supposed to be enabling cloud adoption and delivery, rather than being the primary reason it gets abandoned. Wrapper modules like these may have a place where only a very small number of resources are exposed to developers, but the huge reduction in flexibility is not very cloud-friendly. Microsoft has highlighted this antipattern as something to avoid, so why do so many organisations fall back into it? We come back to that scary word again: compliance.

The Issue

Compliance: can’t live with it, can’t live without it. But you can at least make living with it a lot less stressful and time-consuming. So how do we give developers flexible access to the cloud and its resources, while keeping compliance in check and resources secure, without becoming a bottleneck for cloud adoption? We shift compliance left, towards the developer!

If we look at where compliance is currently enforced in our scenario, it sits with the cloud platform team, and implementing each module just to reach a baseline of functionality takes a lot of time. That is before factoring in keeping the modules up to date with the frequent changes and improvements that cloud providers release.

So instead of the cloud platform team carrying the burden of enforcing policies through modules, it can pivot to writing the policies that developers’ code is checked against. The developer creating the S3 bucket then becomes responsible for making sure it complies with the cloud team’s policies. With this approach, cloud platform usage can scale beyond the platform team and its (most likely limited) resourcing.

Pre-Deployment

So, how can compliance be shifted onto the developers? Several tools can help, but first let’s look at policy-as-code tools that can be incorporated into pull request pipelines to perform static code scanning. A good baseline tool with a lot of built-in rules is Trivy, so let’s have a look at that!

Trivy is a collection of scanners, covering vulnerabilities, policy-as-code (misconfiguration), secrets, and licenses, all built into one tool. It is very easy to set up, with packages for all major operating systems as well as Docker container images. It does require internet access to download the “checks bundle” (Trivy’s name for its bundle of policies and rules), but you can build a custom binary or container with the bundle pre-installed if required.

Trivy uses Open Policy Agent (OPA) as part of its “Trivy OPA Engine” to provide its policy-as-code functionality. All the checks applied by default can be found in Trivy’s checks repository on GitHub. If there are compliance requirements beyond what is provided by default, it is super simple to write custom checks and extend Trivy. Because it uses OPA, these custom checks can be written quickly in Rego, and there are existing repositories with a large number of policies ready to use. The small example below, written against the Terraform plan JSON produced by `terraform show -json`, ensures that an EC2 instance doesn’t have a public IP address.

```rego
package aws.ec2linux.m1

import rego.v1

# Ensure that the EC2 instance has no public IP address associated
# Terraform policy resource link:
# https://registry.terraform.io/providers/hashicorp/aws/latest/docs/resources/instance#associate_public_ip_address
# AWS link to policy definition/explanation:
# https://aws.amazon.com/premiumsupport/knowledge-center/ec2-associate-static-public-ip/

# Helper: true when the planned actions include a create or an update
is_create_or_update(actions) if "create" in actions

is_create_or_update(actions) if "update" in actions

deny contains reason if {
    some resource in input.resource_changes
    resource.mode == "managed"
    resource.type == "aws_instance"
    is_create_or_update(resource.change.actions)
    # Only an explicit false passes; unset, null, or true are all denied
    not resource.change.after.associate_public_ip_address == false
    reason := sprintf("AWS-EC2Linux-M-1: '%s' EC2 Linux instance should have 'associate_public_ip_address' parameter set to false", [resource.address])
}
```

Trivy would then be set up to run on pull requests into a branch, scanning the .tf files for any problems. If Trivy detects anything it doesn’t like, the pull request can either be denied outright or flagged for further investigation, and the cloud team will need to grant an exemption before it can continue. It is a very simple example, but the approach can be extended to cover a lot of compliance requirements. Because the scanning and denial happen on pull requests, policy-as-code is incredibly scalable and can keep up with a growing organisation.
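To make the input format concrete, here is the same rule sketched in Python rather than Rego. It runs against the Terraform plan JSON that the Rego check reads via `input.resource_changes`. It illustrates what the policy evaluates and is not a replacement for Trivy. The script and function names are hypothetical. A pipeline could produce the input with `terraform plan -out=tfplan` followed by `terraform show -json tfplan > plan.json`, and the non-zero exit code would fail the pull request check.

```python
import json
import sys

# Hypothetical Python mirror of the AWS-EC2Linux-M-1 Rego rule, for illustration.
RULE_ID = "AWS-EC2Linux-M-1"


def is_create_or_update(actions):
    """True if the planned actions include a create or an update."""
    return "create" in actions or "update" in actions


def deny_reasons(plan):
    """Return one reason string per non-compliant aws_instance in a plan JSON dict."""
    reasons = []
    for rc in plan.get("resource_changes", []):
        if rc.get("mode") != "managed" or rc.get("type") != "aws_instance":
            continue
        change = rc.get("change", {})
        if not is_create_or_update(change.get("actions", [])):
            continue
        after = change.get("after") or {}
        # Missing, null, or true all fail: only an explicit false passes.
        if after.get("associate_public_ip_address") is not False:
            reasons.append(
                f"{RULE_ID}: '{rc['address']}' EC2 Linux instance should have "
                "'associate_public_ip_address' parameter set to false"
            )
    return reasons


def main(path):
    """Print every violation and return a CI-friendly exit code."""
    with open(path) as f:
        plan = json.load(f)
    reasons = deny_reasons(plan)
    for reason in reasons:
        print(reason)
    # A non-zero exit code is what makes the pipeline step fail.
    return 1 if reasons else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```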

Post-Deployment

So what happens if your developers deploy some nice, shiny infrastructure but, for some reason, have trouble with, I don’t know, RDPing into an instance? Instead of going through the PR process, they just go in and create one quick 0.0.0.0/0 security group rule. Why developers have direct click-ops access to modify security groups is a discussion for another time, but this scenario is not far from what happens in many organisations today.

How do you detect and remediate these problems? There are many tools, both paid and free, for this function, from platforms like Wiz (which can also do code compliance and policy-as-code) and Cloud Conformity to more DIY solutions like Steampipe/Powerpipe, CloudQuery, and CloudSploit. These tools fall under the category of Cloud Security Posture Management (CSPM). Cloud platforms also provide tooling for this, like AWS Config.
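The quick 0.0.0.0/0 RDP rule described above is exactly the kind of drift these tools look for. As a minimal DIY sketch of one CSPM-style check, and not how any of the named tools implement it, the Python below flags security groups that expose TCP 3389 to the whole internet. It assumes the response shape of the EC2 `DescribeSecurityGroups` API (`IpPermissions`, `IpRanges`, `Ipv6Ranges`). boto3 is used only in the optional `scan_account` helper, so the pure functions can be tested without credentials. All function names are hypothetical.

```python
# Hypothetical DIY check: flag security groups exposing a port (RDP by default)
# to 0.0.0.0/0 or ::/0. Input is the "SecurityGroups" list from
# EC2 DescribeSecurityGroups.
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def rule_covers_port(perm, port):
    """True if an IpPermissions entry allows TCP traffic on `port`."""
    proto = perm.get("IpProtocol")
    if proto == "-1":  # "-1" means all protocols and all ports
        return True
    if proto not in ("tcp", "6"):
        return False
    return perm.get("FromPort", 0) <= port <= perm.get("ToPort", 65535)


def open_to_world(perm):
    """True if the rule has an IPv4 or IPv6 source range of 'anywhere'."""
    v4 = {r.get("CidrIp") for r in perm.get("IpRanges", [])}
    v6 = {r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])}
    return bool((v4 | v6) & OPEN_CIDRS)


def find_open_port(security_groups, port=3389):
    """Return (GroupId, GroupName) for every group exposing `port` to the world."""
    findings = []
    for sg in security_groups:
        for perm in sg.get("IpPermissions", []):
            if rule_covers_port(perm, port) and open_to_world(perm):
                findings.append((sg["GroupId"], sg.get("GroupName", "")))
                break  # one finding per group is enough
    return findings


def scan_account(region, port=3389):
    """Live scan of one region; boto3 is imported lazily so it stays optional."""
    import boto3

    ec2 = boto3.client("ec2", region_name=region)
    groups = []
    for page in ec2.get_paginator("describe_security_groups").paginate():
        groups.extend(page["SecurityGroups"])
    return find_open_port(groups, port)
```

Remediation (revoking the rule, or opening a ticket) would hang off the findings list. Dedicated CSPM tools add the parts this sketch skips: multi-account coverage, reporting, and hundreds of other checks.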

For most commercial uses, I would recommend a paid product like Wiz, which is a more complete end-to-end CSPM. But I am cheap, so open source and free is the way for me! This does mean more work is required to incorporate the CSPM into the infrastructure development lifecycle. After testing many of the OSS “CSPM” products, my go-to choice is CloudSploit because of its ease of use and very short time to value.

CloudSploit

As CloudSploit is a more involved program, it gets a section of its own. It runs from the CLI and produces reports against built-in compliance frameworks such as HIPAA, CIS, and PCI. If the built-in policies don’t cover your needs, plugins can be used to extend it to specific compliance requirements.

Getting up and running is as simple as cloning the repository and running npm install.

```shell
$ git clone git@github.com:cloudsploit/scans.git
$ cd scans
$ npm install
```

To configure access to the cloud provider and account of choice, use either config files or environment variables.

```shell
$ export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
$ export AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
$ export AWS_SESSION_TOKEN=IQoJb3JpZ2luX2VjEN7//////////wEaCXVzLWVhc3QtMSJIMEYCIQDyZo6rRqRTo2yMA7j0g9zUUXOQ+TSYvT1JkK30i164QIhANiSAei3CTmSCVnISxKyMPudFlbYayUUy8LyVuLfzUzKt8BAiEA///////////wEQRWySHL2hDlOczzL7PBlZBHzHciVk7JX6Vo6ZU3M0t0RymoB+AFaKpdWqQYUuh2G03tVrTnOIw3Qp6WXszRiGbQIhAMjMxNjY
```

Then launch CloudSploit to scan the account and generate the report.

```shell
$ ./index.js --ignore-ok
```

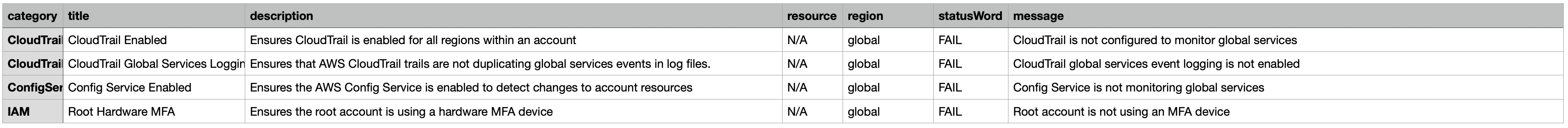

Checking through the output of a report on one of my sandbox accounts (don’t judge!) reveals the detections that it has picked up.

Where is the Value?

Post-deployment scanning provides the most value when it is used as a gate before promoting to higher environments. If an issue is found in a lower environment, it must be logged and/or resolved before the pull request into the higher environment is approved. Risk registers and the compliance reports produced by your tooling of choice go hand in hand for proving compliance with any particular framework.
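As a minimal sketch of that gate, the Python below reads a CloudSploit JSON report. It fails the pipeline step if any FAIL result has not already been logged in a risk register. It assumes the report was produced with CloudSploit’s JSON output option (for example `./index.js --json=report.json`). It also assumes each result carries `plugin`, `region`, `resource`, `status`, and `message` fields, so check both against the CloudSploit version you run. The risk-register format and all function names are hypothetical.

```python
import json
import sys

# Assumed CloudSploit JSON result fields: plugin, region, resource, status, message.
# Only FAIL blocks promotion here; WARN could be added if your policy requires it.
BLOCKING = {"FAIL"}


def load_register(entries):
    """Build accepted (plugin, resource) pairs from a hypothetical risk register,
    given as a list of dicts with "plugin" and "resource" keys."""
    return {(e["plugin"], e["resource"]) for e in entries}


def blocking_findings(results, accepted):
    """Return findings that should block promotion to the next environment."""
    return [
        r for r in results
        if r.get("status") in BLOCKING
        and (r.get("plugin"), r.get("resource")) not in accepted
    ]


def gate(report_path, register_path):
    """Print unaccepted failures and return a CI-friendly exit code."""
    with open(report_path) as f:
        results = json.load(f)
    with open(register_path) as f:
        accepted = load_register(json.load(f))
    blockers = blocking_findings(results, accepted)
    for b in blockers:
        print(f"{b.get('plugin')} {b.get('region')} {b.get('resource')}: {b.get('message')}")
    return 1 if blockers else 0


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(gate(sys.argv[1], sys.argv[2]))
```

Keying accepted risks on (plugin, resource) means an exemption covers one finding on one resource. It does not silence a whole check across the account.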

While these tools are great and provide a lot of compliance coverage out of the box, they sometimes fall short of the organisation’s requirements. In these cases, tools can be stitched together so that their coverage overlaps and all aspects are covered: for example, using Wiz as the dragnet for 99% of compliance and platform-specific tooling like AWS Config to pick up the stragglers. As long as the organisation has a well-defined compliance framework (or simply follows best practice from one of the many fantastic existing frameworks), there is very little that can’t be covered by combining pre- and post-deployment compliance scanning.
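One way to check that stitched-together tools really leave no gaps is to map each control your framework requires to the tools that check it. The sketch below is hypothetical throughout: control IDs, tool names, and the mapping itself would come from your own framework and from each tool’s documentation. It lists controls that no tool covers. It also lists controls that only one tool covers, where there is no overlap to catch that tool missing something.

```python
# Hypothetical coverage check: which required controls does no tool cover,
# and which rely on a single tool with no overlapping coverage?


def coverage_gaps(required, tool_coverage):
    """Return required controls not covered by any tool, sorted.

    required:      iterable of control IDs the organisation must evidence
    tool_coverage: dict of tool name -> set of control IDs that tool checks
    """
    covered = set().union(*tool_coverage.values())
    return sorted(set(required) - covered)


def single_points(tool_coverage):
    """Per tool, return the controls that only that tool covers."""
    counts = {}
    for controls in tool_coverage.values():
        for control in controls:
            counts[control] = counts.get(control, 0) + 1
    return {
        tool: sorted(c for c in controls if counts[c] == 1)
        for tool, controls in tool_coverage.items()
    }
```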

That being said, outside of the major CSPM vendors, there is a bit more work required to set up the scanning and host the data returned from the scans. Whether there is a central account hosting the scanner or each individual account has its own would be up for internal discussion, with tools like Wiz removing the need to host your own infrastructure.

Shifting Left

As with security, compliance is best delivered in layers. Combining multiple tools maximises coverage and makes it easy to fold solutions for emerging issues or new compliance requirements into your deployment flows. All cloud providers also have tooling for post-deployment compliance, like AWS Config. Compliance is also easier to report on when the policies can be expressed precisely in a human-readable format, rather than having to show that the same compliance exists somewhere in the mess of module code.

There is one aspect of the deployment process that shifting compliance left doesn’t take care of: ease of access for unskilled developers. Many organisations try to sell a vending machine of sorts, providing infrastructure as building blocks in a barely-any-code kind of way, so that the organisation doesn’t have to spend the time and money upskilling developers on the many cloud providers available. I believe upskilling developers is a necessity, so they can build cloud-native solutions and make better use of the large number of resources available to them (you don’t want to end up just shifting everything to EC2 instances!). However, there are some cases where upskilling won’t happen in time.

In these cases, the cloud team can provide a selection of “modules” that each represent a type of deployment: say, a static website hosted in S3, bundling the S3 bucket, DNS, and CloudFront. These larger modules must still comply with the policies already in place, but they provide a vending-machine approach until developers have upskilled enough to write their own infrastructure code.

Another, possibly even easier, route now that all the policies are codified would be to offer developers an AI assistant. The AI can either be fine-tuned on the policies or use RAG to fetch them, and then provide compliant code directly to developers. This lets the vending machine materialise in more of a Star Trek replicator way than a Coke-vending-machine way (one can make anything; the other only ever gives you Coke).
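The retrieval half of the RAG option can be sketched in a few lines. The Python below is a toy. It scores each codified policy against a developer’s request using bag-of-words cosine similarity, a stand-in for the embedding search a real assistant would more likely use. It then assembles the best matches into a prompt. The policy IDs and texts, the prompt wording, and the function names are all hypothetical, and no model is actually called.

```python
import math
import re
from collections import Counter

# Toy retrieval step for a RAG-style assistant: rank codified policies by
# bag-of-words cosine similarity to the request and put the best ones in the prompt.


def tokens(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(request, policies, k=2):
    """Return up to k policy IDs most similar to the request (score > 0)."""
    query = Counter(tokens(request))
    scored = [(cosine(query, Counter(tokens(text))), pid) for pid, text in policies.items()]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))  # best score first, ties by ID
    return [pid for _, pid in scored[:k]]


def build_prompt(request, policies, k=2):
    """Place the retrieved policies ahead of the developer's request."""
    context = "\n".join(f"- {pid}: {policies[pid]}" for pid in retrieve(request, policies, k))
    return (
        "Write Terraform that complies with these policies:\n"
        f"{context}\n\nRequest: {request}"
    )
```

Because the same codified policies also drive the pull request checks, anything the assistant produces is still scanned before it reaches an environment.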